Modeling and synthesizing textures are essential for enhancing the realism of virtual environments. Methods that directly synthesize textures in 3D offer distinct advantages over UV-mapping-based methods, as they can create seamless textures and align more closely with the ways textures form in nature. We propose Mesh Neural Cellular Automata (MeshNCA), a method for directly synthesizing dynamic textures on 3D meshes without requiring any UV maps.

MeshNCA is a generalized type of cellular automata that can operate on a set of cells arranged on a non-grid structure, such as the vertices of a 3D mesh.
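The abstract does not spell out MeshNCA's update rule. As a rough illustration of what "cellular automata on a non-grid structure" can mean, here is a minimal NumPy sketch under stated assumptions: each mesh vertex is a cell holding a C-channel state vector; each cell perceives its one-ring neighbors through a simple Laplacian-like term (mean of neighbor states minus its own state); and a small MLP, shared by all cells and given hypothetical random weights, produces a residual update that is applied stochastically per cell. The function names (`build_adjacency`, `perceive`, `nca_step`) and the perception filter are illustrative assumptions, not the paper's exact formulation.

```python
import numpy as np


def build_adjacency(faces, num_vertices):
    """Return one sorted array of neighbor indices per vertex, built from triangle faces."""
    neighbors = [set() for _ in range(num_vertices)]
    for a, b, c in faces:
        neighbors[a].update((b, c))
        neighbors[b].update((a, c))
        neighbors[c].update((a, b))
    return [np.array(sorted(n), dtype=int) for n in neighbors]


def perceive(state, neighbors):
    """Concatenate each cell's own state with a Laplacian-like neighbor term.

    Assumption: perception = [own state, mean(neighbor states) - own state].
    """
    neighbor_mean = np.stack([state[n].mean(axis=0) for n in neighbors])
    laplacian = neighbor_mean - state
    return np.concatenate([state, laplacian], axis=1)


def nca_step(state, neighbors, w1, b1, w2, fire_rate, rng):
    """One residual update using a shared two-layer MLP (hypothetical weights).

    Each cell is updated independently with probability `fire_rate`,
    mimicking asynchronous cellular-automaton updates.
    """
    features = perceive(state, neighbors)
    hidden = np.maximum(features @ w1 + b1, 0.0)  # ReLU
    delta = hidden @ w2
    mask = rng.random((state.shape[0], 1)) < fire_rate  # per-cell update mask
    return state + delta * mask


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # A tetrahedron stands in for a real mesh such as an icosphere.
    faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
    neighbors = build_adjacency(faces, num_vertices=4)

    channels, hidden_dim = 8, 16
    state = rng.normal(size=(4, channels))
    w1 = rng.normal(scale=0.1, size=(2 * channels, hidden_dim))
    b1 = np.zeros(hidden_dim)
    w2 = rng.normal(scale=0.1, size=(hidden_dim, channels))

    for _ in range(10):
        state = nca_step(state, neighbors, w1, b1, w2, fire_rate=0.5, rng=rng)
    print(state.shape)  # (4, 8): one state vector per vertex
```

Because the same update is applied at every vertex and depends only on a cell's neighbors, nothing in this sketch is tied to a particular mesh: the same weights can run on any triangle mesh's adjacency, which mirrors the kind of cross-mesh reuse described below.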

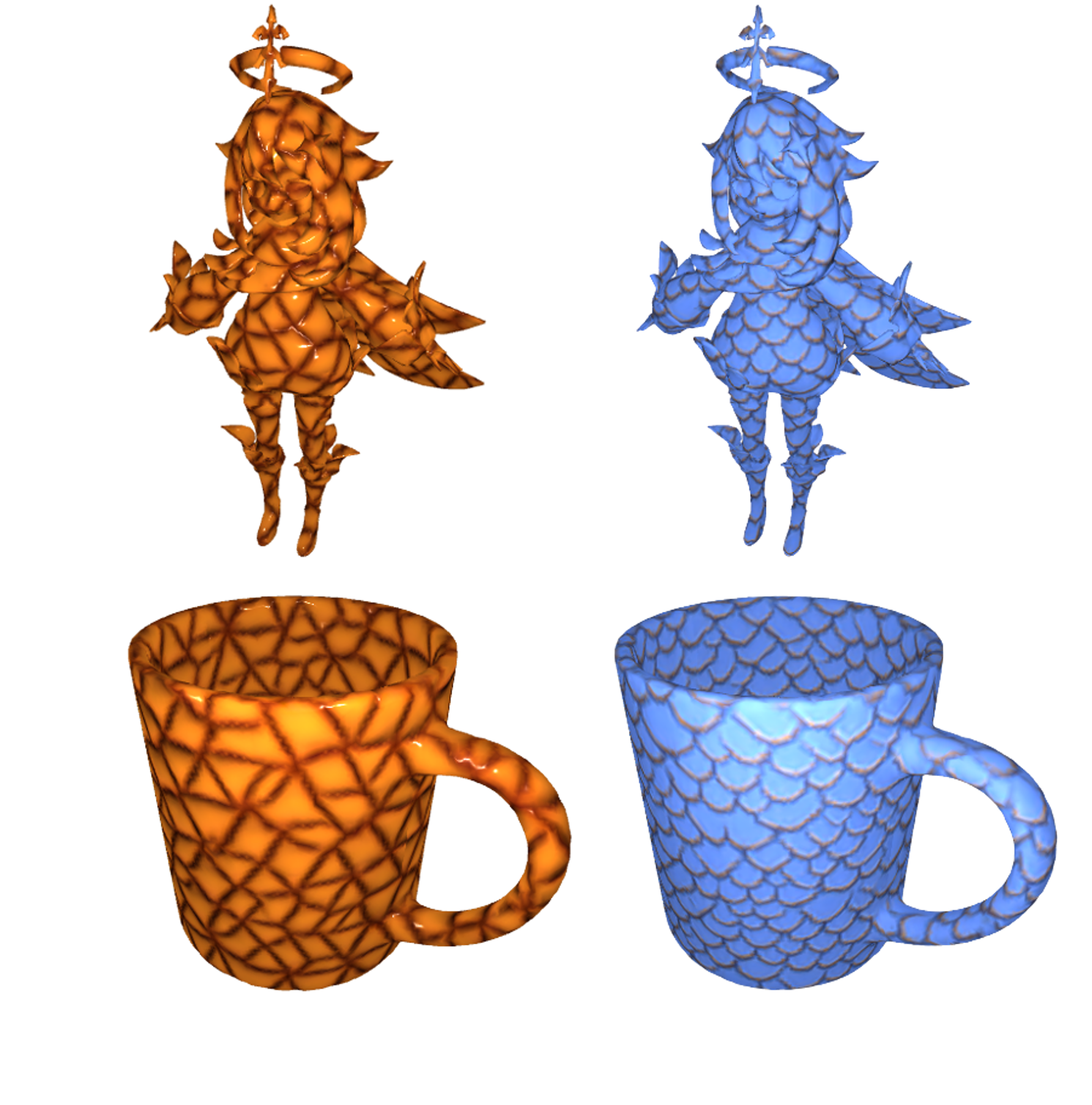

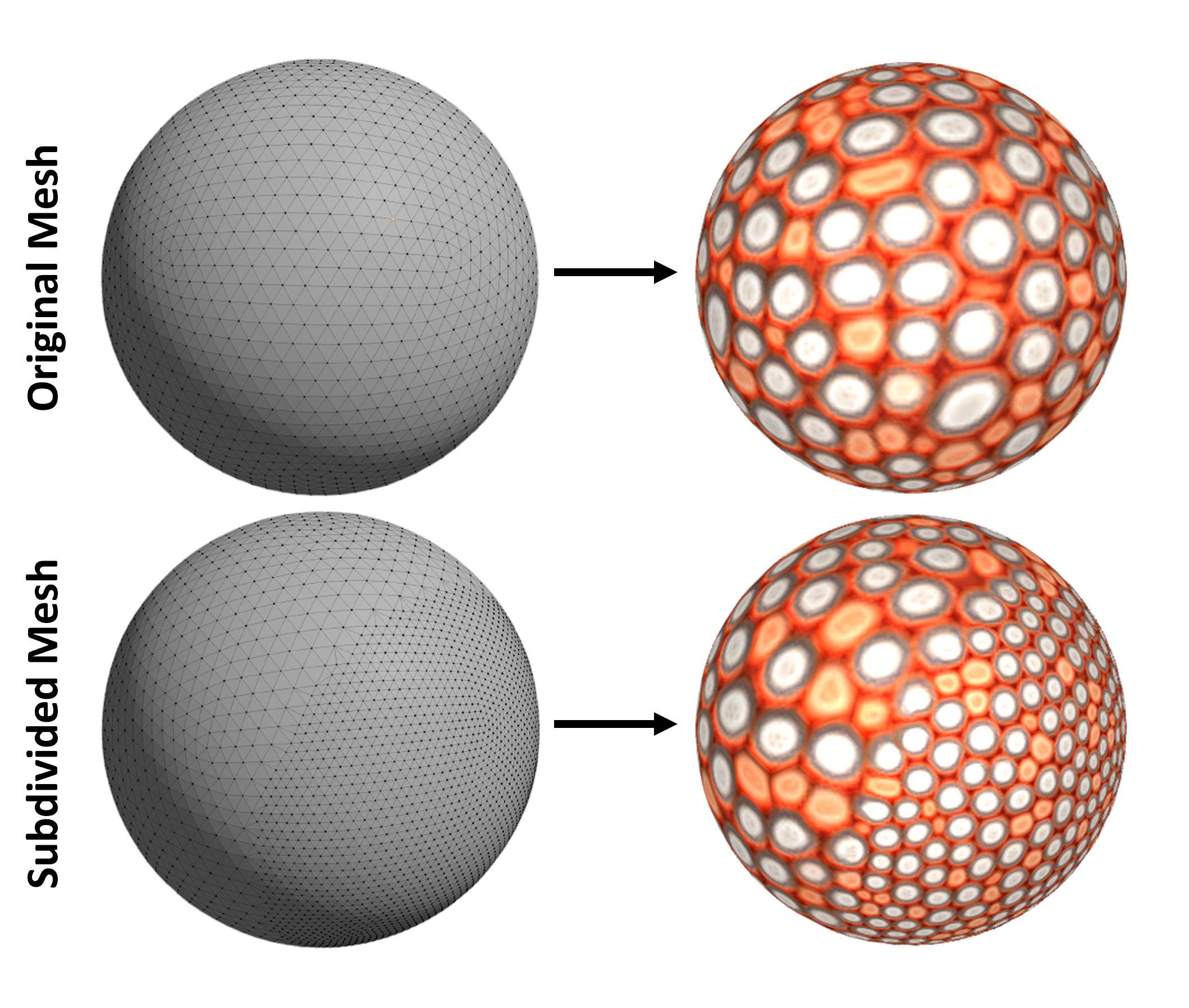

Although trained only on an icosphere mesh, MeshNCA shows remarkable generalization and, after training, can synthesize textures on any mesh in real time. Additionally, it accommodates multi-modal supervision and can be trained using different targets, such as images, text prompts, and motion vector fields. Moreover, we introduce a way of grafting trained MeshNCA instances, enabling texture interpolation. Our MeshNCA model enables real-time 3D texture synthesis on meshes and supports several user interactions, including texture density/orientation control, a grafting brush, and motion speed/direction control. Finally, we implement the forward pass of our MeshNCA model in the WebGL shading language and showcase our trained models in an online interactive demo that is accessible on personal computers and smartphones.